The boundary that separates legitimate from dubious academic journals—and the process by which they can rapidly descend from the former to the latter group—also serves as a cautionary case in point.

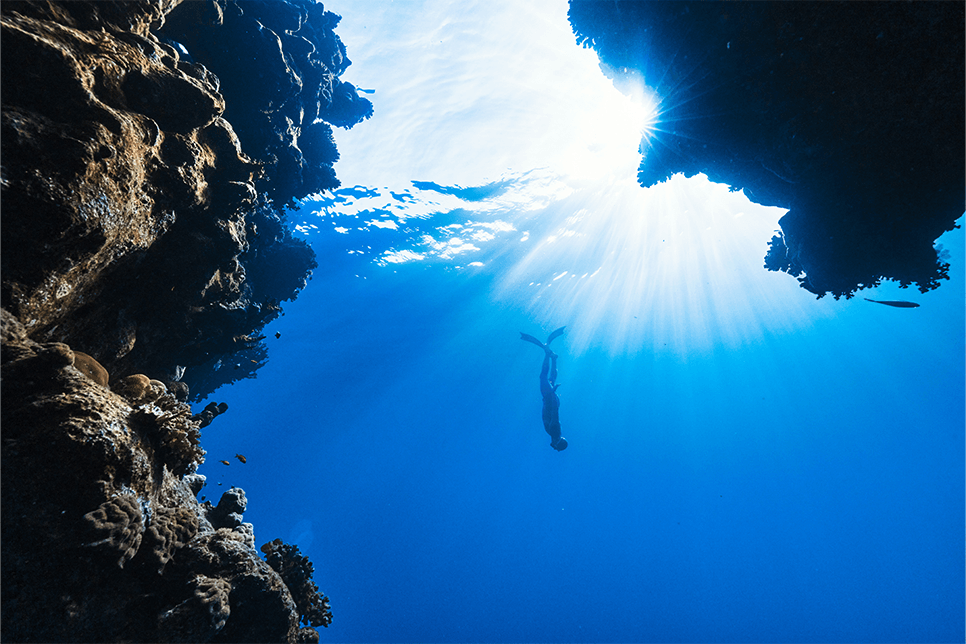

The integrity of scholarly research has always been one of the most—if not the most—important pillars of academic publishing. Authenticity, accuracy and reliability have always been the bedrock of the industry and a source of great pride for those working within it.

Remaining competitive in today’s complicated scholarly journal landscape has never been more challenging for publishers. Confronted with rapidly evolving business models, funding constraints, growing competition and stretched resources, publishers can find that sustaining and growing revenue feels like an uphill struggle.

For the last year and a half, the media and publishing worlds have generated a lot of buzz and discussion about one particular disruptive technology: artificial intelligence (AI).

Last month, customers, friends, and guest speakers from across the globe convened once again for our annual KGL PubFactory Virtual Series. During the course of the three-day event, a packed and topical agenda enabled the scholarly publishing community to share valuable insights, learnings, developments, advice and trends with their peers.

For Peer Review Week, KGL recently interviewed five scholarly journal editors and publishing professionals on the state of peer review in 2023.

During our recent community event, PubFactory Virtual Series: Industry Day, KGL’s Waseem Andrabi, VP of Learning Solutions, presented the different.

Artificial Intelligence is everywhere, and whether we are aware of it or not, it has infiltrated every aspect of our daily lives.